We’re proud to share our innovations at ACM SIGGRAPH 2023, the premier conference for computer graphics and interactive techniques, taking place Aug. 6-10 in Los Angeles. During the event, our researchers will present at six technical paper sessions, two Frontiers Workshops, two Real-Time Live events, and a poster session. Our team will be available throughout the conference to discuss Roblox and our research at our booth. We’re also grateful to the committee for featuring our work in the highly selective technical papers trailer.

Roblox Research pursues the fundamental science of technology for our social 3D platform, with the goal of connecting a billion people with optimism and civility. We advance 3D content creation, physical simulation, and real-time moderation using a combination of first principles and artificial intelligence (AI) techniques. Our newest work enables hair, fabrics, objects, and landscapes to react to movement, collisions, and wind just as they would in the real world. Because we support a global platform with hundreds of millions of users, every aspect must scale on both the client and the server and support any device, from older phones to the latest AR/VR headsets. Read more about the work we’ll be presenting below, and see a schedule of where to find us at SIGGRAPH.

Optimized Rendering

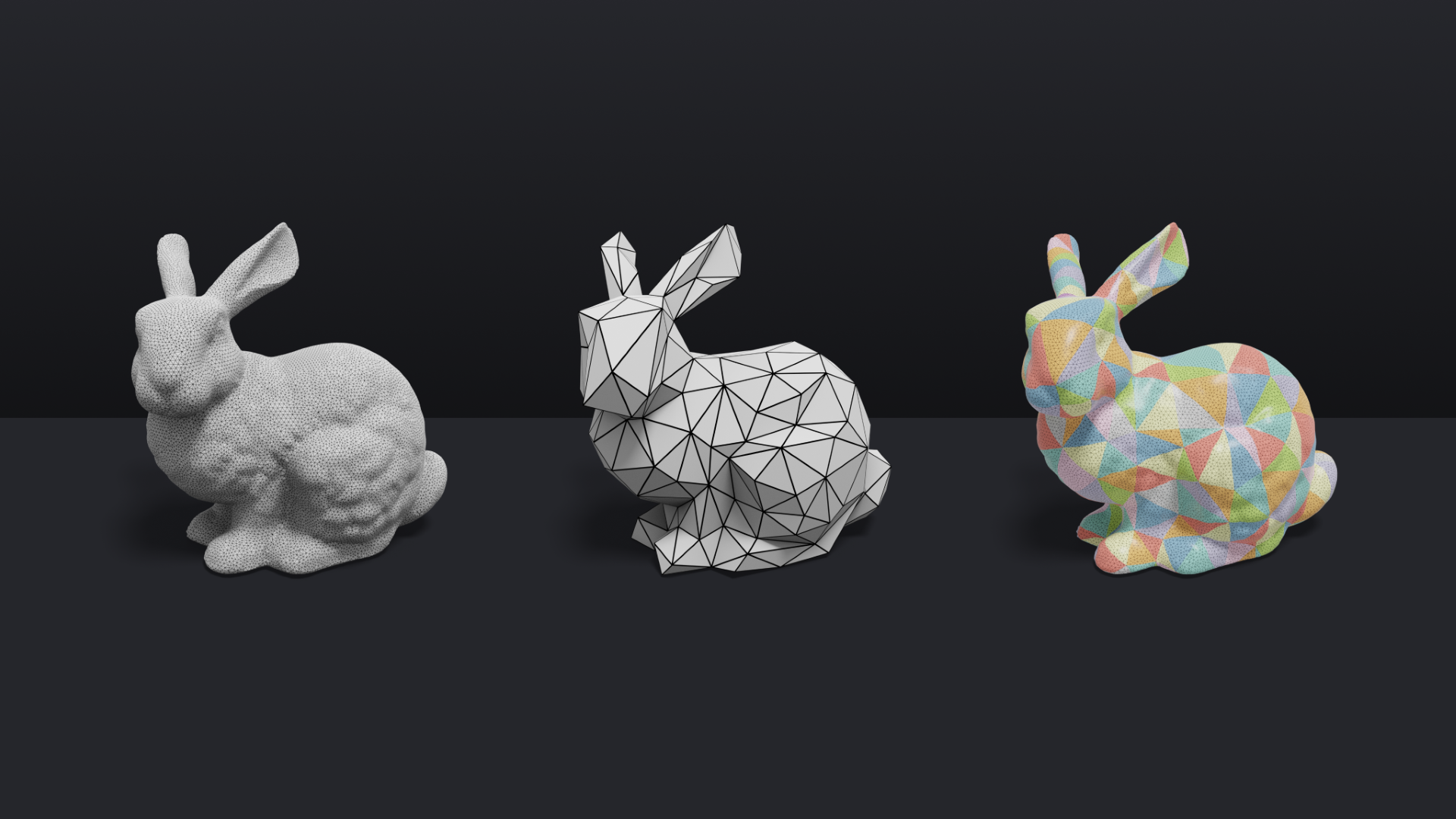

As 3D worlds become more realistic, structures and terrains become increasingly complex. Rendering and simulating these environments requires solutions for real-world effects such as rippling or twisted fabrics and uneven terrain. In their presentation, Surface Simplification Using Intrinsic Error Metrics, Roblox researcher Hsueh-Ti Derek Liu and colleagues from Carnegie Mellon University and the University of Toronto propose a method to simplify intrinsic triangulations. Classic mesh simplification preserves an object’s appearance for rendering purposes, but what if the goal is simulation, where objects can be twisted, bent, or folded? This method simplifies the mesh for simulation by exploring the vastly larger space of intrinsic triangulations, leading to more than 1,000x faster results on common tasks such as computing geodesic distances. This novel method could contribute to greater levels of detail for simulation.
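For readers new to the term, an intrinsic triangulation stores only edge lengths rather than vertex positions, so edges can be rearranged across the surface without changing its geometry. The minimal Python sketch below shows one basic building block, an intrinsic edge flip, which unfolds two adjacent triangles into the plane to find the length of the new diagonal. It is an illustration only, not the simplification method from the paper. The function name and argument layout are hypothetical, and the sketch assumes the two triangles form a convex quadrilateral when unfolded.

```python
import math


def flipped_edge_length(l_ij, l_jk, l_ki, l_il, l_lj):
    """Length of the new edge k-l after flipping the shared edge i-j.

    Triangles (i, j, k) and (i, l, j) share edge i-j. Only edge lengths
    are used; no 3D vertex positions are needed (hypothetical helper).
    """
    # Unfold both triangles into the plane: i at the origin, j on the +x axis.
    # Vertex k sits above the axis, located from its distances to i and j.
    kx = (l_ki**2 + l_ij**2 - l_jk**2) / (2.0 * l_ij)
    ky = math.sqrt(max(l_ki**2 - kx**2, 0.0))
    # Vertex l sits below the axis, located the same way.
    lx = (l_il**2 + l_ij**2 - l_lj**2) / (2.0 * l_ij)
    ly = -math.sqrt(max(l_il**2 - lx**2, 0.0))
    # The flipped edge is the straight line between k and l in the unfolding.
    return math.hypot(kx - lx, ky - ly)
```

For a 3-by-4 rectangle split along its diagonal of length 5, flipping that diagonal yields the other diagonal, which also has length 5.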

Liu will also present work on Differentiable Heightfield Path Tracing in collaboration with colleagues from George Mason University, the University of Toronto, and the University of Waterloo. This work enables fast, realistic rendering of terrain, shadows, and 3D objects for AI training applications. The approach achieves real-time frame rates, orders of magnitude faster than most existing 3D mesh differentiable renderers. It unlocks the possibility for interactive inverse rendering applications, including future versions of our generative AI and moderation AI tools. The researchers demonstrate this method with many interactive tasks, such as terrain optimization and text-based shape generation.
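Part of what makes heightfields attractive for rendering is that a ray can be tested against the surface with a simple height comparison. The Python sketch below shows a generic ray march with bisection refinement against a height function. It is a toy illustration under stated assumptions, not the path tracer from the paper, and it is not differentiable. The function name, step size, and parameters are hypothetical.

```python
def intersect_heightfield(height, origin, direction,
                          t_max=100.0, step=0.05, refine=30):
    """Return the ray parameter t of the first hit with z = height(x, y).

    Returns None if the ray starts below the surface or no hit is found
    within t_max. Thin features narrower than `step` can be missed.
    """
    def clearance(t):
        # Signed vertical gap between the ray point and the terrain.
        x = origin[0] + t * direction[0]
        y = origin[1] + t * direction[1]
        z = origin[2] + t * direction[2]
        return z - height(x, y)

    if clearance(0.0) < 0.0:
        return None
    t_prev, t = 0.0, step
    while t <= t_max:
        if clearance(t) <= 0.0:
            # The surface was crossed between t_prev and t: bisect to refine.
            lo, hi = t_prev, t
            for _ in range(refine):
                mid = 0.5 * (lo + hi)
                if clearance(mid) > 0.0:
                    lo = mid
                else:
                    hi = mid
            return 0.5 * (lo + hi)
        t_prev = t
        t += step
    return None
```

For example, a ray starting at height 1 and pointing straight down at a flat terrain of height 0 reports a hit at approximately t = 1.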

Realistic Motion for Avatars and Beyond

Human movement is complex and widely varied. Reproducing it faithfully in virtual environments, for both realism and expression, is a great challenge. Humans are, for example, very adept at multitasking, or combining different behaviors seamlessly. Traditional computer graphics focuses on single behaviors such as walking or throwing, and requires combined behaviors to be authored explicitly.

In their paper, Composite Motion Learning with Task Control, Roblox researchers Victor Zordan and Pei Xu, along with colleagues from Clemson University and the University of California, Merced, propose a new reinforcement learning approach for complex, task-driven motion control of physically simulated characters. With this multiobjective control approach, characters can perform composite multitask motions, like juggling while walking. They can also combine a wide array of other activities without explicit reference motion examples of the combined behavior. The approach also supports sample-efficient training by reusing existing controllers.

Simulated hairstyles present another movement challenge in immersive 3D environments. There, carefully designed and styled hair models may immediately collapse under their own weight, unable to maintain their intended shapes against the pull of gravity. Sag-free Initialization for Strand-based Hybrid Hair Simulation, presented by Roblox researcher Cem Yuksel and colleagues from LightSpeed Studios, proposes a new initialization framework for strand-based hair systems. This work eliminates sagging by solving for the internal forces that hair must exert to preserve its shape under gravity and other external forces. This is accomplished without unnecessarily stiffening the hair dynamics, while also accounting for strand-level collisions. This paper was also awarded a Best Papers Honorable Mention.
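The core idea of solving for internal forces that balance gravity can be illustrated on a much simpler system. The Python sketch below uses a vertical chain of point masses joined by springs, pinned at the top, and picks each spring’s rest length so that the styled shape is already in static equilibrium. This is a toy 1D analogy under stated assumptions, not the framework from the paper; the function names and the mass-spring model are hypothetical.

```python
def sag_free_rest_lengths(target_y, masses, stiffness, gravity=9.81):
    """Rest lengths that hold a hanging chain at its styled positions.

    target_y: heights of the pinned root followed by each mass (decreasing).
    masses:   mass of each non-root node, so len(target_y) == len(masses) + 1.
    """
    n = len(masses)
    rest = [0.0] * n
    load = 0.0
    # Walk from the tip to the root, accumulating the weight each spring carries.
    for i in range(n - 1, -1, -1):
        load += masses[i] * gravity
        styled_length = target_y[i] - target_y[i + 1]
        # Shorten the rest length so the spring tension equals the load.
        rest[i] = styled_length - load / stiffness
    return rest


def net_forces(target_y, masses, rest, stiffness, gravity=9.81):
    """Net vertical force on each mass at the styled positions."""
    n = len(masses)
    tension = [stiffness * ((target_y[i] - target_y[i + 1]) - rest[i])
               for i in range(n)]
    forces = []
    for i in range(n):
        pull_from_below = tension[i + 1] if i + 1 < n else 0.0
        forces.append(tension[i] - pull_from_below - masses[i] * gravity)
    return forces
```

With rest lengths simply set to the styled lengths, every mass starts with an unbalanced downward force and the chain sags on the first step. With the solved rest lengths, the net force on every mass is zero at the styled shape, and the spring stiffness itself is unchanged.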

Roblox is a simulation-based 3D platform, in which the primary interactions between objects and with avatars are mediated by first-principles physics instead of explicit code. This year we share two new results that advance object simulation.

Alongside colleagues from UCLA, University of Utah, and Adobe Research, Yuksel will present Multi-layer Thick Shells, which proposes a novel approach to thickness-aware simulation of materials like leather garments, pillows, mats, and metal boards. This approach avoids shear locking and efficiently captures fine wrinkling details, opening the door to fast, high-quality, thickness-aware simulations of a variety of structures.

Another movement challenge in simulations arises when objects collide and separate. In the real world, objects that collide can generally pull apart again, still as two distinct shapes. Yuksel, with colleagues from the University of Utah, will present a method for efficiently computing the Shortest Path to Boundary for Intersecting Meshes from a given internal point. It offers a quick and robust solution for collision and self-collision handling while simulating deformable volumetric objects. This allows extremely challenging self-collision scenarios to be simulated with efficient techniques such as XPBD, which provide no guarantees on collision resolution, unlike computationally expensive simulation methods that must always maintain a collision-free state.
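To show what a shortest-path-to-boundary query returns, the Python sketch below handles the simplest possible case: a point inside a 2D polygon that does not self-intersect, solved by brute force over all edges. It is only a toy illustration and not the algorithm from the paper, which targets intersecting and self-intersecting meshes where this naive closest-point search is not sufficient. The function names are hypothetical.

```python
import math


def closest_point_on_segment(p, a, b):
    """Closest point to p on the segment from a to b (2D tuples)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    denom = dx * dx + dy * dy
    # Project p onto the segment's line, then clamp to the segment.
    t = 0.0 if denom == 0.0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / denom
    t = max(0.0, min(1.0, t))
    return (a[0] + t * dx, a[1] + t * dy)


def shortest_path_to_boundary(p, polygon):
    """Nearest boundary point and its distance for a point inside a polygon.

    polygon is a list of 2D vertices in order; the last connects to the first.
    """
    best_point, best_dist = None, math.inf
    n = len(polygon)
    for i in range(n):
        q = closest_point_on_segment(p, polygon[i], polygon[(i + 1) % n])
        d = math.dist(p, q)
        if d < best_dist:
            best_point, best_dist = q, d
    return best_point, best_dist
```

In a collision response, the vector from the penetrating point to the returned boundary point gives a direction and depth for pushing the point back out.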

Interactive Events

Everything on the Roblox platform is interactive and in real time. The best way to experience our new advances is to see them in action through live demos and our Real-Time Live sessions. At Roblox Generative AI in Action, researchers Brent Vincent and Kartik Ayyar will demonstrate how creators can leverage natural language and other expressions of intent to build interactive objects and scenes without complex modeling or coding. At Intermediated Reality with an AI 3D-printed Character, Roblox’s Kenny Mitchell and 3Finery Ltd’s Llogari Casas Cambra will showcase an AI model that processes live speech recognition and generates responses as a 3D-printed character — animating the character’s features in sync with the audio.

Roblox scientists will also be speaking on panels at the Frontiers Workshops. At Beyond IRL, How 3D Interactive Social Media is Changing how we Interact, Express, and Think, Roblox scientists Lauren Cheatham and Carissa Kang will join domain experts, social scientists, and behavioral researchers to discuss how immersive virtual environments can help shape attitudes and behaviors, support identity exploration, and help younger generations establish healthy boundaries that can extend to their real world. At Expressive Avatar Interactions for Co-experiences Online, Roblox scientists Ian Sachs, Vivek Virma, Sean Palmer, Tom Sanocki, and Kenny Mitchell, along with other experts, will discuss challenges and lessons learned from deploying experiences for remote interactivity and expressive communication to a large global community.

Adrian Xuan Wei Lim will host a Poster Session to present Reverse Projection: Real-Time Local Space Texture Mapping, a novel projective technique designed for use in games that paints a decal directly onto the texture of a 3D object in real time. Because the projection is outward looking and computed in local-space textures, creators on anything from low-end Android devices to high-end gaming desktops can personalize their assets. This proposed pipeline could be a step toward improving the speed and versatility of model painting.

See all of the papers the Roblox Research team will present at SIGGRAPH 2023 here. Visit our booth and sessions, listed below. We look forward to meeting you in person!

Sunday, Aug. 6

Monday, Aug. 7

Tuesday, Aug. 8

Wednesday, Aug. 9

Thursday, Aug. 10